Open vs closed AI models – what does it mean and why does it matter?

Understanding the difference between open-source and closed AI models helps you make smarter choices for business, education, healthcare, and creative work.

Artificial intelligence has become impossible to ignore, especially with tools like ChatGPT now a routine part of work and life. But behind the scenes, not all AI is created—or shared—the same way. One of the biggest questions shaping the future of AI is whether these tools should be “open” or “closed.”

This debate influences who benefits, who can innovate, what’s possible, and even how much you can trust the answers you get. So, what does “open vs closed AI” really mean, and why should you care?

Why this matters to you

Whether you’re a teacher, a business manager, a healthcare professional, or a creative, the type of AI you use—open or closed—shapes what you can do with it. It determines how much you can trust its outputs, how flexible it is for your needs, and what risks you need to watch out for.

How open and closed AI models work

When people talk about open AI models, they’re usually referring to systems whose model weights (the mathematical guts of the AI) are available for anyone to download, inspect, tinker with, and reuse. Some projects go further and also publish their code and training data. In practice, that means you (or your tech team) could:

Download the model and run it on your own hardware

Adapt it for unique needs

Check how it makes decisions

By contrast, closed AI models are like black boxes. They’re built and maintained privately, usually by a company that provides access through a web interface or app. Think of ChatGPT, which you interact with on OpenAI’s platform. You can use these tools, but you can’t see, edit, or fully understand the underlying machinery.

Everyday impact of open vs closed AI

This distinction isn’t just academic. Whether an AI model is open or closed affects your experience in important ways:

Transparency and trust: Open models let anyone check for bias or errors, making it easier for researchers and even curious professionals to hold the system accountable. Closed models rely on trust in the provider’s promises about quality and safety.

Innovation and customisation: With open models, you can tailor or improve the tool for your own sector or need—imagine a healthcare clinic adapting an AI to local patient data, or a school adjusting a teaching tool to fit the curriculum. Closed models limit that flexibility in favour of a polished, standardised product.

Security and safety: Closed models give the provider centralised control over misuse, data handling, and user safety, since updates and monitoring are managed in one place. Open models may pose greater security risks if poorly understood or used without safeguards, but they also allow external experts to spot and fix vulnerabilities quickly.

What my son’s music project taught me about AI

This all clicked for me during a conversation with my son, who’s experimenting with AI in his music projects. His group faced a choice: use open-source tools like DiffRhythm or Spleeter, which anyone could remix and extend, or opt for closed, commercial platforms like SOUNDRAW or AIVA, which were easier to use but far less adaptable. Their debate over open vs closed tools revealed the same tensions professionals face in business, education, and beyond.

The discussion between classmates wasn’t just about features—it quickly became a debate about creative control, ownership, and how much they could learn from the tools themselves. It struck me that their experience with music AI was a microcosm of much bigger trends in technology. This is the same landscape we navigate with ChatGPT and many other AI tools.

Examples across different sectors

Consider the tools you use every day:

Business: Open-source CRM software lets companies customise and audit their customer data tools, while a closed, proprietary CRM comes pre-packaged but can’t easily be changed.

Education: A school with an open-source learning management system (LMS) can tweak lessons and integrate local resources, while paid, closed systems require waiting for the next official update.

Healthcare: A practice management dashboard built on open data can be fine-tuned for local compliance rules. Closed electronic health record (EHR) software comes with vendor-backed security and support, but makes it difficult to introduce new features or integrations.

Creative: You might remix and build on open-access music tracks or visual designs, while streaming services or design platforms limit editing and adaptation.

Pros and cons of open vs closed AI models – which is better?

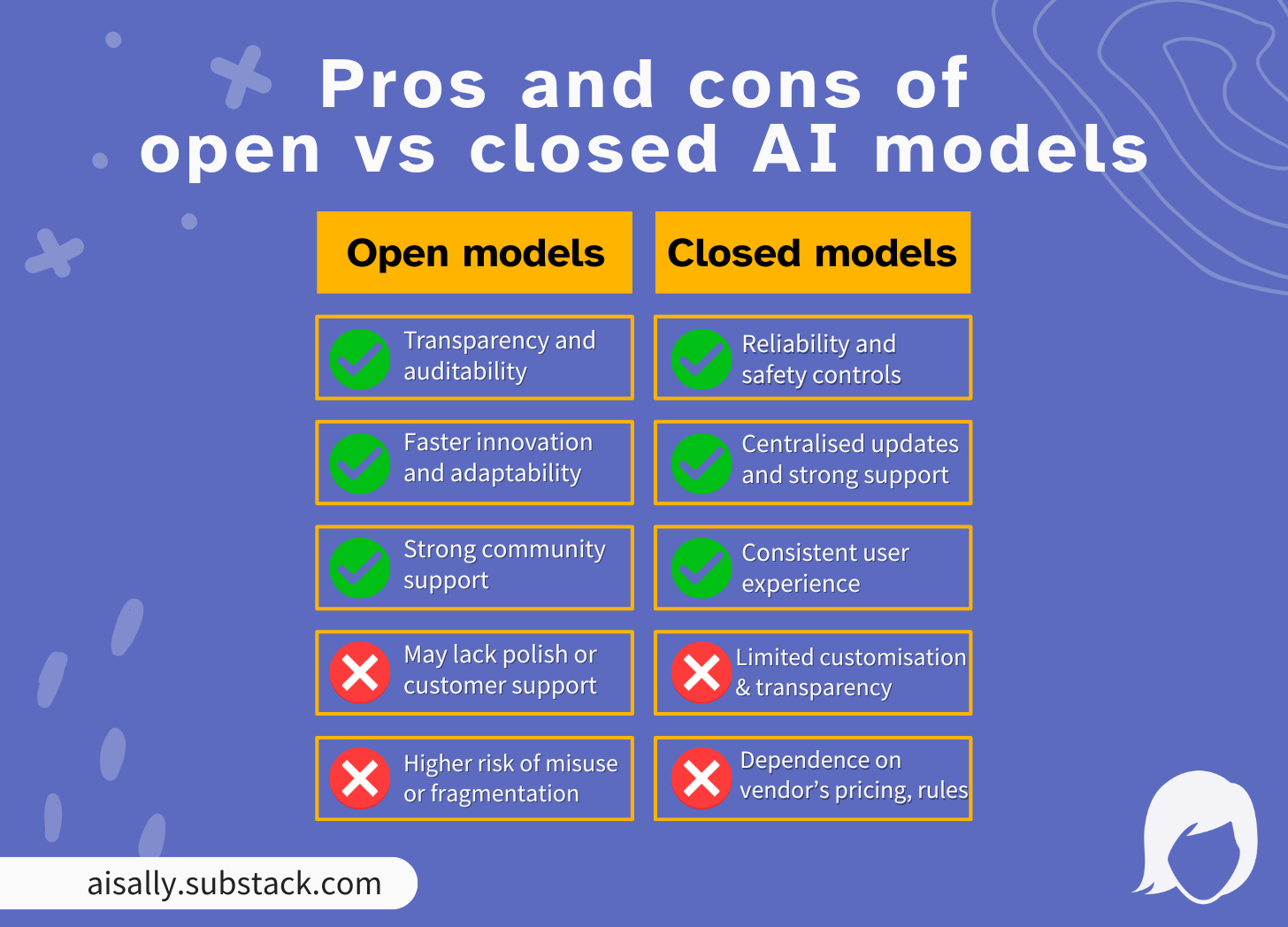

There’s no universal answer—open and closed approaches both have advantages and pitfalls.

Open models:

Pros: Maximum transparency, rapid innovation, adaptability, strong community involvement. They’re ideal for experimentation, research, and niche professional needs.

Cons: May lack the polish, support, and guardrails of commercial products; higher risk of fragmentation and, in some cases, misuse or security holes if not managed carefully.

Closed models:

Pros: Reliability, strong safety controls, centralised updates, and professional support. Easy for end-users, with a consistent user experience across sectors.

Cons: Less customisable; you’re subject to the provider’s decisions regarding features, availability, and even pricing. Limited or no ability to audit or explain outputs deeply.

How this relates to ChatGPT

ChatGPT itself is a “closed” AI model. It’s developed and controlled by OpenAI, and you access it through OpenAI’s website or apps rather than downloading and running it yourself. This approach offers real benefits: OpenAI can roll out safety features quickly, monitor use, and guard against widespread misuse. For most users, especially in healthcare, education, or business environments that demand reliability and privacy, a closed platform is often the safest and most practical choice.

However, the closed nature means you have to take some things on faith: you can’t inspect how the system was trained, tweak it for unique local needs, or confirm that it fits your ethical or regulatory framework. You’re also limited to whatever features or safeguards OpenAI provides.

Meanwhile, a new wave of open AI models, such as Meta’s Llama, Mistral’s open-weight models, and smaller music or art AIs, is being developed worldwide. Some schools, clinics, and firms are starting to experiment with these where full transparency or deep customisation matters.

The reality? Most organisations will use a blend of open and closed AI tools—balancing the safety and polish of closed products with the flexibility and empowerment of open models.

Making the right choice for your needs

If you’re wondering what’s best for your work or team, ask yourself:

Are transparency and flexibility more valuable to you, or do you prioritise consistency and support?

Do you need to inspect, adapt, or deeply integrate the AI into your own systems?

Is it critical for your users or clients to know exactly how the AI is making decisions?

Do regulatory or privacy requirements in your sector demand control over data and methods?

Use open models when you need innovation, learning, or unique local solutions. Use closed models when safety, service, and reliability are paramount. In practice, many professionals find they need both—an open tool for classroom exploration, for instance, and a closed, supported one for official data reporting.

Final thoughts

The tension between open and closed AI models shapes everything from classroom curricula to creative freedom, business competitiveness, and patient care. As ChatGPT and similar tools continue to evolve, understanding this distinction empowers you to choose, shape, and trust the digital tools that are transforming your world.

Sometimes you want a product that “just works”—but sometimes, it’s worth asking what’s under the hood.

This piece wraps up our series on ChatGPT 5 and the bigger AI questions shaping how we use it. If you missed the earlier articles, I’d love for you to catch up on those—and if you found this series useful, please share it with a colleague or friend who’s exploring AI too.

🔗 ChatGPT 5 series: catch up on all articles

I’m exploring ChatGPT 5 in depth in this special AI Sally series. Here’s where you can dive in:

Overview → ChatGPT 5 has arrived: what you need to know

New capabilities → ChatGPT 5 new features: smarter reasoning, longer context, and a creativity boost

Safety features → Exploring the new safety features of ChatGPT 5

Free vs paid → Free vs paid – what’s different (and how to choose the right version for you)

Open vs closed models → You’re reading it now!

💡 Each article breaks down complex AI topics in plain language, with examples for business, education, healthcare, and creative work—so you can feel confident using AI in ways that truly help.