Meta Vibes: entering the age of AI-generated short video

How Meta’s new ‘Vibes’ feed is changing what creativity looks like — and what it could mean for professionals, brands, and everyday creators

It started with a short, looping clip that looked as if it belonged in a dream: shifting styles mid-motion, the colours pulsing like music. The strange thing was, no one had filmed it. It was generated entirely by AI inside Meta’s new platform, Vibes.

Vibes is part experiment, part social feed, part glimpse into where online creativity might be heading. In this space, you don’t need a camera, a studio, or even editing software. You need an idea, a sentence, and a willingness to play.

What exactly is Meta Vibes?

Vibes lives inside the Meta AI app and on meta.ai, and every clip you see there is synthetic[1]. Some are built from scratch using text prompts; others are remixed versions of videos that someone else already made. You can change the style, tone, soundtrack, or colour palette with a few taps.

Meta describes Vibes as “a new way of creating and remixing AI videos”. The experience is deliberately open-ended: part TikTok, part experimental film lab. You scroll through a feed of endlessly regenerating video loops, each one a visual idea that can be copied, remixed, and reimagined by anyone who sees it.

And this is where the name Vibes really fits. It’s not about telling stories in the traditional sense. It’s about evoking moods, feelings, or aesthetics that exist for a few seconds before morphing into something else.

Where Meta is heading

Vibes isn’t just a novelty; it’s part of Meta’s larger plan to weave generative AI into every layer of its ecosystem. The company has already integrated its Meta AI assistant into Messenger, Instagram, and WhatsApp. Vibes takes that a step further: it’s a space where the content itself is AI-born.

Videos made in Vibes can be shared to Instagram or Facebook, blurring the line between user-created and AI-generated content. Meta is also experimenting with partnerships, including collaborations with companies like Midjourney and Black Forest Labs, to refine how realistic or artistic these AI videos can look.

Meta calls Vibes an ‘early preview’ and is rolling it out over time. Availability may vary by region and app version[2]. But Meta has made it clear that it sees AI-driven creativity as central to its future. The shift from human-only content creation to hybrid human–AI collaboration isn’t just coming; it’s already here.

Why Vibes matters

The arrival of Vibes represents a profound shift in who gets to make video and how it’s made. Traditionally, video creation has required skill, time, and resources. Vibes removes most of those barriers. Anyone with a thought or feeling can bring it to life visually, often in seconds.

That kind of accessibility can be empowering. Imagine being able to prototype an advertising concept, an explainer video, or even a brand aesthetic in an afternoon. You don’t need production budgets or editing software, just an idea and curiosity.

But Vibes isn’t about polished outcomes. At least not yet. It’s about the spark before the polish: that early, messy, creative stage where people test, remix, and discover what’s possible. Meta seems to be betting that this culture of play will drive new forms of community engagement and keep people experimenting within its platforms.

A new kind of creative playground

Spend a few minutes scrolling through Vibes and you’ll see why it feels so different from traditional short-form video feeds. Some clips are hypnotic and poetic; others are random, chaotic, even nonsensical. But together, they reveal a new kind of creative language emerging, one defined not by filming reality, but by prompting imagination.

You can take someone else’s video and rework it — shift the colour tone, change the rhythm, or overlay new music. The result might be something completely different in mood and message. It’s creativity by iteration, not isolation.

For creators and professionals, this idea of remixable storytelling is powerful. It means campaigns, educational content, or even artistic projects could evolve in real time, shaped by community interaction rather than controlled production cycles.

With all that in mind, you might be wondering how to actually dive in. The good news is that Meta Vibes isn’t locked behind a wall of technical complexity — it’s surprisingly approachable once you know where to start.

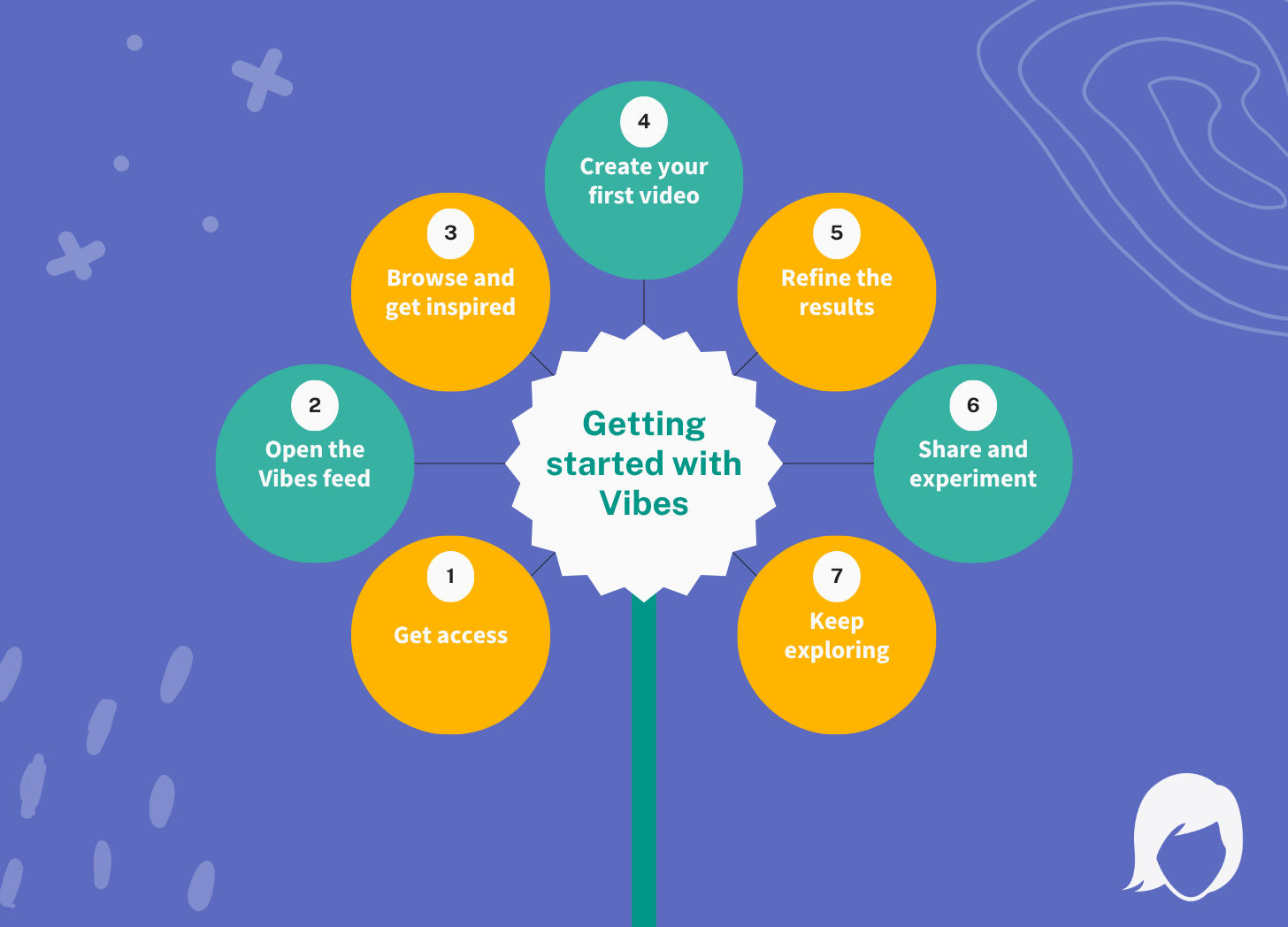

How to get started with Vibes

If you’re curious to try Meta’s new AI-first video platform, you don’t need any technical skills or filmmaking experience. Vibes is designed to feel more like a creative playground than a production studio. You can explore, prompt, remix, and share within minutes.

Here’s how to begin.

1. Get access

Download the Meta AI app or visit meta.ai in your browser. You’ll need to log in with your Facebook or Instagram account. (At the time of writing, Vibes is being rolled out gradually, so it may not yet be available in all regions.)

2. Open the Vibes feed

Once you’re in the app, look for the Vibes section, usually under “New chat”, “Create”, “Explore”, or its own tab. This is where the AI-generated video feed lives. You’ll see a mix of clips created from prompts, remixes, and other users’ experiments.

3. Browse and get inspired

Start by scrolling. The feed is full of visual styles, from abstract AI motion graphics to cinematic mini-scenes. Treat it like a moodboard. Notice what catches your eye: colours, textures, camera movement, transitions. These will help you refine your own prompts later.

4. Create your first video

You can start in two ways:

From scratch: Type a prompt (e.g. “A slow-motion shot of waves crashing under pink sunset light”). You can also upload a photo or short clip as a visual reference.

By remixing: Tap an existing video in the Vibes feed and choose Remix. You can change its style, tone, or soundtrack to make it your own.

5. Refine the results

After generating, Vibes usually offers a few options. Pick the one that feels closest to your idea and tweak it. Adjust colours, effects, or motion. If your clip feels static, try the Animate function to turn it into a moving scene.

6. Share and experiment

Once you’re happy with the result, share it straight to the Vibes feed, or cross-post to Instagram or Facebook Reels. You can also send your creations privately via DMs. If you find an AI video you like on Instagram, there’s often an option to open it in Meta AI and remix it directly.

7. Keep exploring

Like most AI tools, Vibes learns from your behaviour. The more you prompt, remix, and interact, the more the feed adapts to your style. Treat it as an ongoing experiment: part creative lab, part social network.

The setup takes minutes, and once you’ve had a taste of what it feels like to shape moving images in real time, it’s hard not to imagine where this could lead next. Beyond the novelty, Vibes hints at deeper shifts in how we create, work, and connect in digital spaces.

What professionals should watch for

If you’re a marketer, designer, or educator, it’s worth paying attention to Vibes: not necessarily to publish there yet, but to observe what’s happening. The platform is an early indicator of how AI could transform both the look and logic of social video.

Imagine being able to generate test visuals for a campaign, try multiple aesthetic directions, and gauge responses before a single dollar is spent on production. That’s not far-fetched. Tools like Vibes could eventually integrate with ad testing, audience targeting, or creative analytics inside Meta’s existing ad ecosystem.

For solo creators and small businesses, Vibes might become a low-barrier entry point for experimenting with visual identity. You can try ideas that would normally be out of reach, such as cinematic looks, stylised effects, and surreal scenes, and see what resonates with your audience.

But, as with all new tools, the early excitement comes with caution.

The messy side of AI video

Meta’s experiment isn’t without controversy. Early users have pointed out that much of the feed feels chaotic: a stream of low-effort AI content that some critics have dubbed “AI slop”[3][4][5]. Because anyone can generate and remix instantly, the overall quality can be uneven.

Then there’s the question of authenticity. When every clip is synthetic, how do viewers know what’s real? Do they care? And what happens to our sense of trust if every person and brand starts producing entirely artificial imagery?

There are also bigger ethical questions. Remixed content blurs ownership boundaries. If you take someone’s video, add your prompt, and publish it, who owns the result? And how should consent work if AI models begin referencing real people or likenesses in their training data?

For now, Meta says it’s building moderation and transparency tools into Vibes, including labelling to indicate that content was AI-generated. But as the platform scales, the line between playful remixing and problematic reuse will likely get thinner.

The opportunity, and the challenge, for professionals

The biggest opportunity with Vibes isn’t about perfect visuals. It’s about speed and experimentation. It gives professionals and brands a place to think visually before producing anything tangible.

A marketing team could prototype mood films for an upcoming product launch. A teacher could generate quick visual metaphors to explain a concept. A small business owner could explore tone and style before committing to a full video shoot.

The challenge will be balancing innovation with responsibility. If AI-generated content becomes indistinguishable from reality, then consent, authenticity, and credibility become fragile. Professionals will need to build their own guardrails, not just around how they use these tools, but around how they label and communicate the results.

What to do now

If you have access to Vibes, it’s worth exploring. Not to post professionally polished content, but to understand how prompting translates into visual storytelling. Notice what kinds of styles or ideas the feed is surfacing. Observe what audiences engage with.

If you don’t yet have access, you can still prepare. Start drafting internal guidelines for how your team or organisation will handle synthetic visuals. Think about transparency, tone, and quality control. These discussions will soon be standard parts of creative workflows.

And if you’re simply curious, play. Type a phrase, watch it come alive, and ask yourself what this kind of tool might make possible in your work or creative practice.

Looking ahead

If you’re an AI Sally reader who has already explored my recent piece on Sora, OpenAI’s cinematic-style video generator, you’ll recognise that we’re standing at a turning point in visual media.

Where Sora aims for realism and emotional depth, Vibes tilts toward spontaneity and shared remixing. They’re different expressions of the same larger trend: the merging of human creativity and machine capability.

In my next post, I’ll bring those two together, exploring how Sora and Vibes illustrate two distinct futures for AI-driven video: one rooted in craft and storytelling, the other in play and participation.

For now, Vibes offers a fascinating preview of what happens when everyone, not just professionals, can create in motion. Whether it evolves into a meaningful new medium or just a fleeting experiment, it’s a sign of where creativity is heading: faster, freer, and more fluid than ever before.

[1] Meta. Introducing Vibes: A New Way to Discover and Create AI Videos, 25 September 2025.

[2] Actualidadgadget. Meta introduces Vibes, the AI-generated short video feed within its app, 30 September 2025.

[3] TechCrunch. (Malik, Aisha). Meta launches ‘Vibes,’ a short-form video feed of AI slop, 25 September 2025.

[4] The Guardian. (Milmo, Dan). Cute fluffy characters and Egyptian selfies: Meta launches AI feed Vibes, 27 September 2025.