How to discuss AI in job interviews

What applicants should share, what employers should ask, and how to avoid red flags

The emergence of ChatGPT and other AI tools has shifted the landscape not only of job interview preparation, but of everyday work and classroom expectations. For both applicants and employers, and equally for educators and students, the challenge today is to navigate the evolving line between helpful assistance and unearned advantage, with policies and attitudes still catching up to the rapid pace of change.

The class that changed overnight: academic integrity, suspicion, and AI

A few years ago, while teaching at a local university, I experienced first-hand how quickly AI could challenge our traditional notions of authenticity and academic achievement. Week after week, I’d provide careful feedback on the written assignments of a student who was consistently struggling, directing him toward tutoring and support. Then, one week, everything changed: his submission was polished, fluent, almost unrecognisable in both structure and tone.

I felt almost cheated, even a little insulted. Did this student think I wouldn’t notice such a dramatic shift? At the time, university policy was clear: no AI was permitted in student submissions. But without evidence, all I could do was address the entire class, reminding them that under our guidelines, only 100% original work, without AI assistance, was acceptable. That experience drove home just how complex the question of AI integrity had already become.

This classroom vignette echoes a broader tension seen in recent studies and policy updates. In 2024, Australian higher education oversight bodies released updated risk assessments of generative AI, highlighting challenges in detection, staff preparedness, and the need for institutional policies that both uphold integrity and recognise the pervasiveness of new technology. Many instructors now balance suspicion and pedagogy, navigating uncertainty as declarations of AI use, process-based assessment, and renewed conversations about learning outcomes become the new norm.

Beyond preparation: everyday AI in the modern workplace

This tension isn’t limited to academia; it plays out daily in business settings too, though often in far more positive ways. Take a friend of mine, a marketing manager at a major Australian brand, who credits her ability to meet the demands of her role to her team’s deliberate and enthusiastic adoption of AI. She’s designed a custom AI persona for the team, ensuring messaging consistency and accelerating everything from campaign brainstorming to final drafts.

Far from being sceptical, her whole team has embraced the productivity boost: for them, using AI isn’t a shortcut, but a critical, creative edge. Studies of marketing and creative teams back this up, showing that integrating generative AI can improve productivity, quality, and even team collaboration when tools are chosen deliberately and guidelines are clear.

Her example illustrates a key finding from recent business case studies: when AI is introduced with clear purpose and team buy-in, resistance is often minimal and results are transformative. In fields like marketing and technology, where content and ideas need to flow rapidly, the value of AI as a collaborative partner and productivity enhancer is hard to overstate.

How to discuss AI use in job interviews

As a result, job interviews are undergoing their own cultural transformation. Employers today expect applicants to speak not only about their interview prep, but about how they use AI in actual work. For applicants, this means coming to the table ready to answer nuanced questions such as:

How do you use AI in your day-to-day workflow?

Where do you draw the line between AI suggestion and your own expertise?

What safeguards do you use to protect privacy and ensure accuracy?

A strong answer might sound like this:

“I use ChatGPT to draft first versions of reports and emails, but I always review and edit them to match the tone and context of my audience. For data-related tasks, I’ll ask AI to structure or summarise information, but I double-check the facts and never enter confidential details into a public model. For me, it’s a way to save time on repetitive work while focusing my energy on strategy and decision-making.”

Applicants who frame their AI use in this way, balancing efficiency with judgment, show employers the adaptability, resourcefulness, and ethical awareness they value most.

Employer expectations: talking about AI in interviews and daily work

On the employer’s side, the expectations have evolved as well. There is still some anxiety about authenticity. Multiple cover letters that sound uncannily alike, for example, can flag over-reliance on AI. But leading companies now focus less on just catching AI usage, and more on setting clear, realistic guidelines for its responsible integration.

In interviews, this means crafting open-ended questions that invite candidates to share both the successes and challenges of AI in their workflow. Employers might ask:

What are your rules for inputting company data into AI systems?

Have you ever faced a privacy concern or mistake with generative AI? How did you handle it?

Can you point to a project where AI made the difference, and another where it fell short?

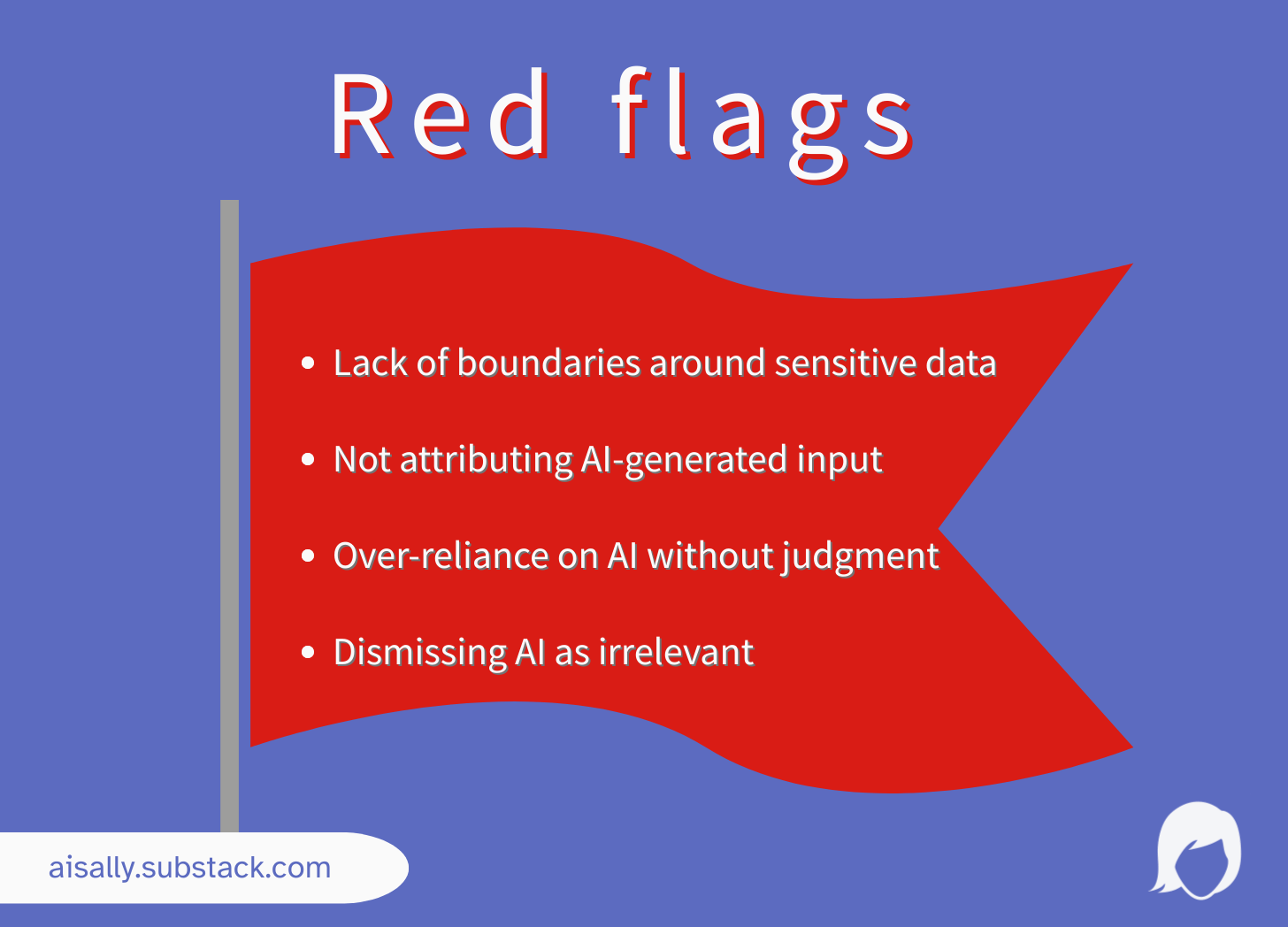

Employers should also be alert to red flags, such as candidates who:

Can’t explain how they fact-check or edit AI outputs.

Present AI-generated work as entirely their own without attribution.

Dismiss AI outright as “irrelevant” for the role, especially in industries where adoption is growing quickly.

These conversations surface not just technical skills, but also ethical awareness, proactivity, and alignment with organisational policy, qualities that recent workplace studies identify as increasingly vital for future-ready teams.

Evolving policies: integrity and innovation

Both educational institutions and businesses are scrambling to keep policies relevant. In universities, the focus is shifting toward process-based assessment, transparent AI use declarations, and aligning outcomes with authentic learning.

In businesses, leaders are updating policies, requiring training, and fostering a team culture where “AI literacy” means knowing the boundaries: never sharing confidential information with public tools and always checking outputs for errors, bias, and completeness.

Globally, approaches differ: the European Union is moving forward with AI-specific regulation, while Australia and the US currently rely more on organisational policies and professional standards. Regardless of region, the trend is clear: responsible AI use is quickly becoming a core workplace competency.

Finding the balance: human judgment, team buy-in, and ongoing adaptation

Looking back, my classroom experience highlights the discomfort of policy lag, of feeling a step behind the technology. Yet my friend’s success in marketing shows that with clear protocols and wholehearted adoption, AI can enrich, not undermine, our work.

The lesson across sectors is the same: authenticity and integrity matter, but so do openness, adaptability, and a willingness to talk about where the human ends and the AI begins.

For applicants and employers alike, and for students and instructors, the future isn’t about banning or blindly adopting AI. It’s about having conversations, setting expectations, and adapting together as norms continue to evolve. If you’re preparing for interviews, start by reflecting on how you already use AI in your daily work. That self-awareness alone will help you tell a more authentic story when it matters most.

👉 Curious about using AI more effectively in your role? You might enjoy my article on how to build your own AI assistant with ChatGPT, which offers a hands-on way to put these ideas into practice.